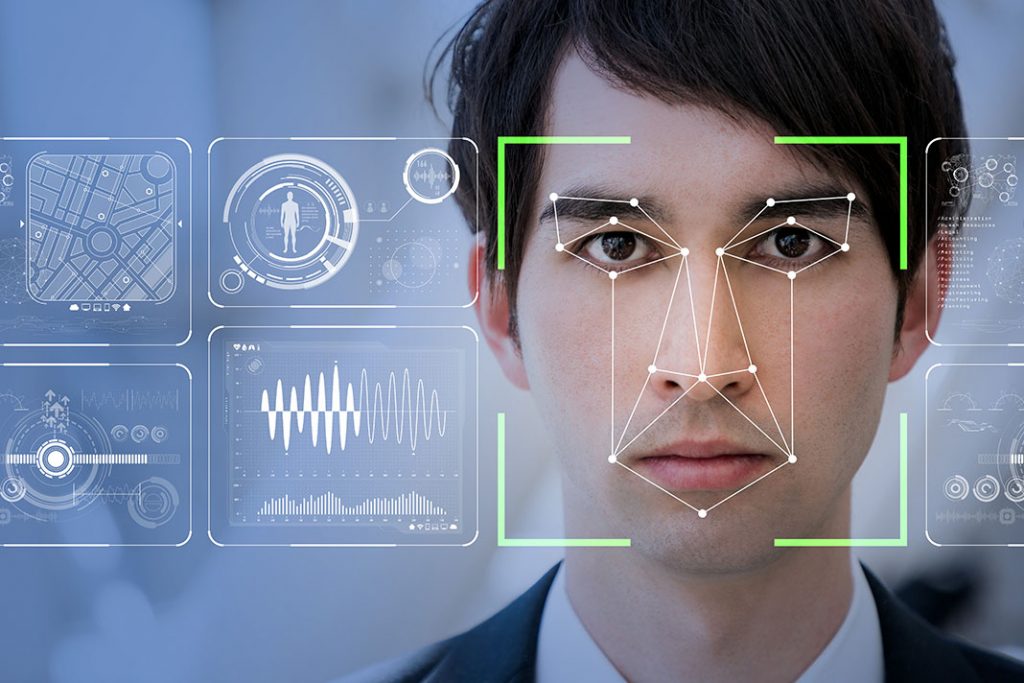

Some pretty big players have done a great deal of work lately to hone facial recognition technology. Great strides have been made in accuracy, but the technology is still not as reliable as it needs to be. In law enforcement, for instance, reliability is critical if a match is to support a conviction. The technology needs to be thoroughly tested in court before it can be relied on that way, and so far there have been few such tests.

The essentials are there. To be useful, the technology needs large databases of photographs and the computing power to sort through them. Both needs can be readily met by advances in technology and by large databases assembled from sources like driver’s license records. All of these are ingredients that AI needs to succeed.

And there is the rub. The technology cannot yet match faces reliably and accurately. A recent Forbes article makes the issues painfully obvious (Forbes article). Those trying to calibrate the systems find themselves stepping into a social minefield fraught with issues. Some of those trying to perfect the technology are tripping those mines and having to deal with the public relations fallout. Firms like Google, which would not normally face this kind of grilling, are pretty good at playing in a minefield. They are now having to stickhandle through the debris (New York Daily News article).

The use of facial recognition technology in a case in Cardiff, Wales, UK, was closely watched as one of the first to test the technology in court. The issue was not “have you got the right guy?” but “have you violated my civil rights?” The case drew attention from various groups with a vested interest in the proceedings. This is just one more minefield issue.

As these issues arise more frequently, the pressure on legislators to intervene grows. The privacy issue creeps from recognition for security purposes into recognition for commercial exploitation.

The issue of commercial exploitation really draws legislators’ attention. To stay ahead of it, groups such as FaceFirst are working with legislators to draft laws that deal with the technology. Others, including Amazon, are joining legislators to craft laws that find the needed balance.

The technology will be perfected until it is reliable regardless of age, sex or race. Right now it is a security feature on our smartphones, and we seem to accept its presence. We seem to accept it when going through an airport. When it monitors our activity outside of that, we may take issue. If it impinges on our privacy, we will take issue. This is where legislation needs to sort things out, and where caution must be taken.